This is one of those “I could have sworn I blogged on this topic years ago” posts. I speak to this topic whenever I’m presenting on SSIS development best practices, and with my half-day mini-seminar in Stockholm last week[1] I was thinking of it, and speaking about it, again. Although SSIS is a great platform and provides pretty much all the tools you need to get the job done, it’s not always easy to know what tools to use and how to use them.

Which brings us, of course, to package templates. So before we continue, please read Knowledge Base article 908018 on how to create a package template in BIDS.

Done? Good – let’s review, and paraphrase while we do it.

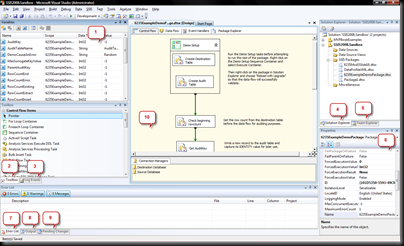

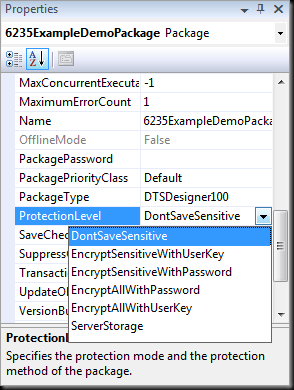

Package templates are pretty much the entire reuse story in SSIS.[2] You define the baseline package, including connection managers, log providers, configurations, variables and expressions, tasks, containers and precedence constraints and sometimes data flow components as well. When you create a new package you create a new copy of the template with this pre-defined starting point instead of starting with a blank package, so you can be sure that the package is just like the baseline you created.

In keeping with the theme established in the post title, this is the “good” side of SSIS package templates. They’re valuable, and when you’re building a large number of packages for the ETL subsystem of a DW/BI project, they’re invaluable, in that the common “plumbing” required by all packages can be defined once and used consistently everywhere. And this is particularly vital when working in a team environment, where different developers (each of whom inherently has his own style and own way of building packages) are working on the same project, which is typically the case on ETL projects of anything but the most trivial scale.

Which brings us to the “bad” side of package templates, at least as implemented when following the guidance in the KB article referenced above. This guidance is firmly targeted at the lone developer; did you notice how all the instructions about creating a package and saving it in the Visual Studio install folder never mentioned on what computer you should be performing these steps? That’s because the article assumes the context is “your machine”: the lone developer’s machine or, at scale, every developer’s machine.

Why is this bad, you ask? Surely it can’t be so much work to copy a DTSX file to the same folder on a few computers, can it?

Of course not. The “bad” side of following this approach comes not when creating the templates, but when the real world comes into play and the project continues to grow and evolve. In a perfect world, when the needs of the project are understood 100% before development begins, and the needs of the project never change after development begins, this approach will work just fine for team development. Your mileage may vary, but I’ve yet to work an SSIS project[3] like this.

In the real world, most successful BI projects are iterative and agile, just like most other successful development projects. If you look at SQL Server MVP John Welch or MVP and VSTS Ranger Ted Malone, their blogs focus strongly on agile BI. If you read Kimball, you will see an overarching theme of agile and iterative development. And if you’ve ever seen former SSIS program manager Michael Entin present on SSIS performance tuning[4] you’ve noticed this best practice under the heading of “Principles of Good Package Design”: “Follow Microsoft Development Guidelines - Iterative design, development & testing.”

Ok, so why did I just go off on that tangent and drop so many names? Regular readers will quickly note that my writing style practically revolves around tangents and will likely seize on this as the reason, but today it is to drive home a point: The real world is neither pretty nor neat, and people who know more about BI than I will ever forget[5] have gone out of their way to point this out.

Why is this bad, you ask yet again? Surely it can’t be so much work to copy a DTSX file to the same folder on a few computers, can it? And can you please just get to the point, Matthew?

Maybe. Picture this story:

A small team of five or six SSIS developers is working together on a project. The lead developer has created a package template for all developers on the team to use moving forward. He emails the DTSX file to each team member along with a reference to KB article 908018 for instructions on how to install and use the template.

A week later the lead developer identifies a problem with the package template.[6] He updates the template package and emails it out to his team with instructions to update their local machines with the new package right away.

Unfortunately, one of the team members is a “heads-down” kind of developer who turns off email during the day, and finishes a few more packages before he gets the message, even though he does save the new template package once he checks his email.

And another developer is on vacation when the email is sent, and by the time he gets back he has too many new messages in his inbox to ever get through, so he decides that if anything is really important then the sender will re-send it, and he just deletes anything that is unread to get a fresh start with no email backlog.[7] So he keeps on developing packages using the outdated template until the next update is sent out a few weeks later…

You see where this is going, right? This is going to the “ugly” side of SSIS package templates. The ugly part comes in downstream when integration testing begins. The packages developed by the individual team members need to be deployed into a test environment and tested together, and of course at that point things start to fall apart. Everyone works extra hours and deadlines slip by while people try to track down problems they knew had been solved long ago. Although to be honest, the worst case scenario is that the test cases don’t find the problem at this point, and the ETL solution is deployed into production without the errors being resolved.

Unfortunately, I have seen this story play out in one way or another on several different projects over the years. The details are always different, but the ending is always the same: extra work, unhappy clients, and pain.
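To make the failure in this story concrete, here is a minimal sketch (in Python, which is my choice for illustration and not part of SSIS or BIDS) of how a team could at least detect that a local template copy has drifted from the lead developer's current one. The function names and the idea of comparing file hashes are assumptions made for this example; nothing like this ships with the tooling described in the KB article.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's bytes."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def stale_templates(reference: Path, local_copies: list[Path]) -> list[Path]:
    """Return the local copies that are missing or differ from the reference.

    `reference` is the template the lead developer considers current;
    `local_copies` are the per-machine copies (hypothetical paths).
    """
    expected = file_digest(reference)
    return [
        copy
        for copy in local_copies
        if not copy.is_file() or file_digest(copy) != expected
    ]
```

Calling `stale_templates` with the lead developer's file and a list of paths to each developer's template folder would return the copies that need replacing. Note that this only detects the drift; it does nothing for the packages already built from an outdated template, which is the part that hurts.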

Now picture this story retold, with a few different details:

The lead developer works with his team to establish Team Foundation Server (TFS) source code control[8] before any development begins. He develops a set of packages that serve as “template examples” and checks them into TFS.

He also establishes standards with his team, so that each team member knows to Get Latest whenever starting work on a new package. This ensures that – no matter what happens – each developer always has the most recent versions of all packages with no need for “special case” tasks like updating a template.

The lead developer also instructs each development team member to create any new packages by right-clicking in Solution Explorer and selecting Add Existing Package, and then browsing to a current file from TFS.[9] This ensures that whenever a new package is created, it is being created as a new instance of a “rolling template” that is stored in TFS and as such the same version is always available for use by all developers.
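For teams that prefer to script this step, the file-copy half of it can be sketched as follows. This is a hedged illustration in Python, not something BIDS or TFS provides: the function name, the folder layout, and the decision to refuse overwrites are all assumptions made for the example, and it presumes the developer has already done a Get Latest so the template in the local working folder is current.

```python
import shutil
from pathlib import Path


def new_package_from_template(template: Path, project_dir: Path, package_name: str) -> Path:
    """Copy the current template into the project folder under a new file name.

    `template` is the up-to-date DTSX file in the local working copy;
    `project_dir` and `package_name` are hypothetical, chosen by the caller.
    """
    if not template.is_file():
        raise FileNotFoundError(f"Template not found: {template}")
    target = project_dir / f"{package_name}.dtsx"
    if target.exists():
        # Never clobber a package someone has already started working on.
        raise FileExistsError(f"Refusing to overwrite existing package: {target}")
    shutil.copyfile(template, target)
    return target
```

The sketch only copies the file. The copy is not added to the SSIS project, and it keeps whatever package name and ID are stored inside the template file, so both would still need attention in BIDS. Add Existing Package remains the simpler route for most teams.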

Then, whenever problems are identified in existing packages, the “problem packages” are updated immediately, and the fixes are applied to any existing packages created from a now-outdated template.[10]

And whenever anyone goes on vacation, problems are found earlier, their impact is lessened, deadlines are met, and everyone lives happily ever after.

Ok, so the results are probably not so extreme, but in my experience on multiple projects this “rolling template” approach is the best practice way to approach SSIS package templates. Any attempt to utilize the Microsoft guidance in a team environment runs into problem after problem, and at the worst extreme I have seen a development team manually re-implement dozens of SSIS packages using a new template after churning for a full week while trying to reconcile and resolve the problems disseminated by using the “official” template approach.

And to be fair, the definition and implementation of policies and procedures can help make following the “official” approach less bad, but the starting point is inherently flawed for team development. If anyone were to suggest to me that following KB 908018 was the right way to approach package templates, I would have to politely[11] disagree.

What do you think? Even though I have been around the block a few times with SSIS, I don’t claim to know it all. I’d love to hear your thoughts on how you use package templates (especially if I took your name in vain earlier on), including what works and what does not work in your team environment.

[1] I never seem to find the time to do tourist stuff when traveling for work, but for some reason I just could not say no when I was invited to speak on SSIS best practices when in Sweden for a friend’s wedding. I have the world’s most understanding wife.

[2] I say “pretty much” because using small, modular packages and executing them via the Execute Package task or some other client, or using the SSIS .NET API to dynamically construct and execute packages, are the other parts. In SQL Server 2005 and SQL Server 2008 there is not a lot to say about reuse in SSIS.

[3] Or projects using any other technology, for that matter. If you know of any projects like this and are looking for a consultant, I’m pretty sure I can work out a moonlighting arrangement with Microsoft. ;-)

[4] Or have seen me blatantly plagiarize his slide decks for my own talks.

[5] No Ted, this doesn’t include you. Sorry.

[6] I won’t go into the details of the problem because it isn’t really important to the story, but it could be anything along the lines of:

a) A missing variable, which needed to be added.

b) A variable defined at an incorrect scope, hiding a variable at the package scope when accessed from a Script task.

c) A log provider needed to be added to support new project requirements.

You get the idea, right?

[7] Yes, people really do this. Yes, I’m thinking about doing it right now. I’ve been on vacation for a week and have been checking email at least once a day to respond to high priority items, but I still have nearly 700 unread messages in my inbox. ;-(

[8] Or Visual SourceSafe or pretty much any modern SCC system. But TFS has so many additional benefits, it’s hard to recommend anything else.

[9] Just to be clear, I’m not implying that there is any ability to browse TFS repositories in BIDS, but instead am saying that the developers all have the most recent packages from TFS and can browse to them on their local file systems.

[10] Odds are they use a tool like PacMan to batch update the existing packages. ;-)

[11] Or perhaps not so politely depending on the hour…