No, wait, that's another post. Please let me begin again.

If you're like me, you tend to stay on the cutting edge, upgrading to the latest and greatest development tools as soon as they're available.

Yes, that's the right post.

The great things about this tendency are that you get new features and functionality early, you are constantly learning new things, and when the products are actually released you already have months of experience using them. So not only do you have a competitive edge, you can also help others who are less adventurous learn things too. All around, it's a real win-win scenario.

But it is not without its pain.

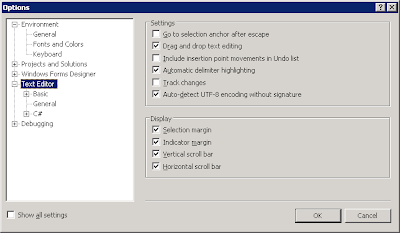

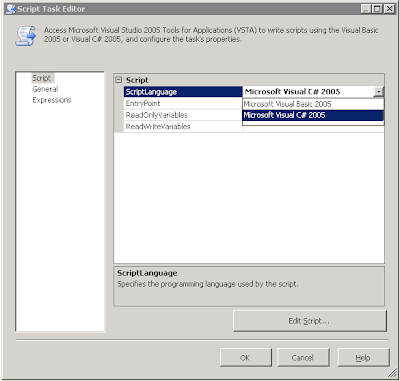

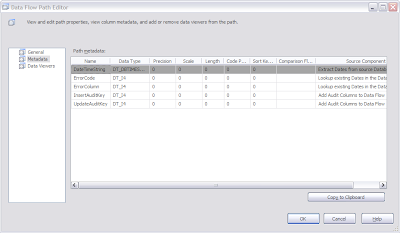

Visual Studio 2008 has recently been released, with important (and cool) new capabilities for people building Windows and web applications in VB.NET and C# and doing other "traditional" development tasks. But what about us Business Intelligence developers? Do you remember that song from the opening of "Four Weddings and a Funeral"?[1] Well, we've been left out in the cold, like Hugh Grant. The current CTP5 release of SQL Server 2008 still relies on Visual Studio 2005, so if you want to get the goodness of Visual Studio 2008 when building SSIS packages, you're out of luck.

...but like with Hugh Grant, there is a happy ending in sight. Michael Entin, a member of the SSIS product team, posted this information today on the SSIS Forums on MSDN:

1) SSIS 2005 requires VS 2005, and will not work with VS 2008.

2) SSIS 2008 RTM will work with VS 2008 (and only with VS 2008 - there will be no option to use it from VS 2005).

3) It is not final yet when this will be implemented, most likely the next CTP will use VS 2005, and there will be a refresh that provides VS 2008 support.

Michael was careful to point out that these plans are tentative and subject to change, but it's still exciting to know that there is a plan and a roadmap for getting the tools we want hooked up with the BI projects we need and love. Now let's just hope that Visual Studio doesn't marry a rich old Scottish gentleman before then...

[1] In case you missed that one, it was "But Not For Me" by Elton John and went "they're singing songs of love, but not for me..."